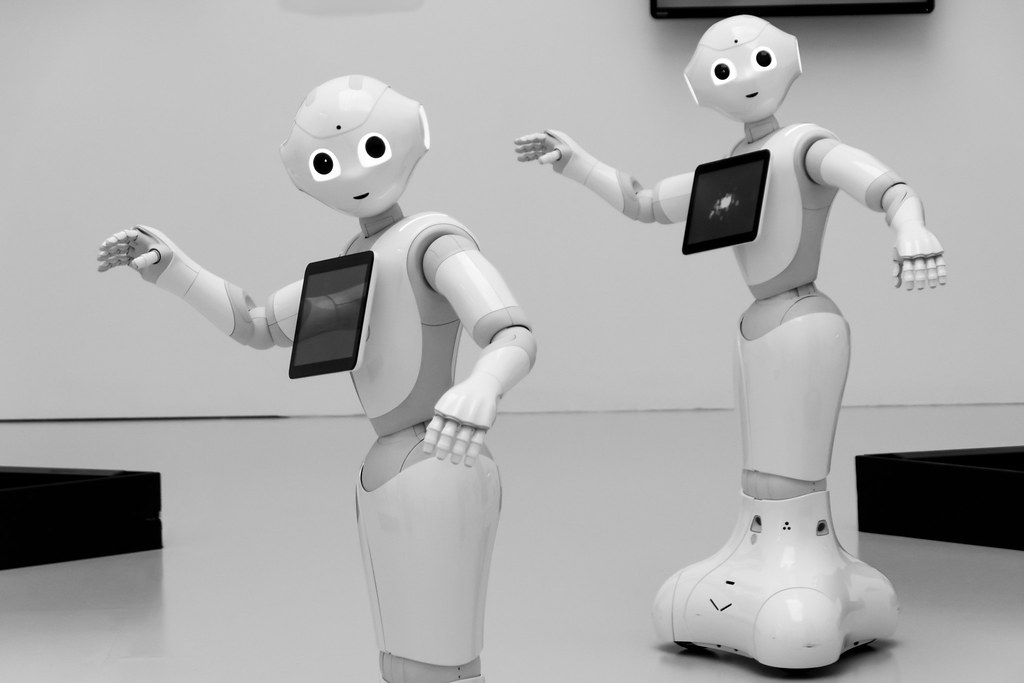

As artificial intelligence (AI) becomes more powerful and more deeply woven into everyday life, from self-driving cars to virtual assistants to medical diagnostics, a fundamental question arises: Can machines have morals? And if they can’t, how do we ensure that the decisions they make align with human values?

This is no longer a philosophical exercise. It’s a real-world challenge faced by scientists, developers, and ethicists. Building intelligent systems means teaching them how to make decisions — and that means teaching them right from wrong.

The Problem with Moral Machines

Machines don’t feel. They don’t have empathy, conscience, or cultural context. They operate on logic and data, not human experience. So how do we teach them morality?

At the heart of this issue lies the difficulty of defining what morality even is. Ethics can be subjective, vary by culture, and evolve over time. What one society sees as acceptable, another may see as deeply unethical. This makes ethics extremely difficult to hard-code into software.

Real-World Examples of AI Ethical Dilemmas

1. Self-Driving Cars and the Trolley Problem

Imagine a self-driving car faced with a no-win situation: either hit a pedestrian who runs into the road or swerve and risk the life of its passenger. What should it do? Who should the AI prioritize?

There’s no single “correct” answer, but the car must do something, and whatever it does will follow from choices built into its logic ahead of time.

2. AI in Hiring

Many companies use AI to screen resumes, but these systems often inherit the biases in their training data. If past hiring favored a certain gender or background, the AI may learn to repeat that pattern, unintentionally discriminating against qualified candidates.

This raises serious questions about fairness, accountability, and transparency in automation.
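A toy example makes the mechanism behind this concrete. The following is a minimal Python sketch, not a description of how any real screening product works. The historical records, the group labels `A` and `B`, the candidate names, and the functions `learn_group_rates`, `screen`, and `selection_ratio` are all hypothetical. The “model” simply memorizes each group’s past hire rate, so it reproduces whatever imbalance the history contains.

```python
from collections import defaultdict

# Hypothetical historical hiring records: (group, was_hired).
# The counts are invented purely for illustration.
HISTORY = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)


def learn_group_rates(records):
    """'Train' a naive model: the hire rate observed for each group."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for group, was_hired in records:
        total[group] += 1
        hired[group] += int(was_hired)
    return {g: hired[g] / total[g] for g in total}


def screen(candidates, rates, threshold=0.5):
    """Advance candidates whose group's historical rate clears the threshold.

    Qualifications are never consulted, which is how a model that
    learns only from past outcomes ends up replaying past bias.
    """
    return [name for name, group in candidates if rates[group] >= threshold]


def selection_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    return min(rates.values()) / max(rates.values())


rates = learn_group_rates(HISTORY)
print(rates)                                          # {'A': 0.6, 'B': 0.3}
print(screen([("Ana", "A"), ("Ben", "B")], rates))    # ['Ana']
print(selection_ratio(rates))                         # 0.5
```

The screen filters out Ben no matter how strong his application is, because it looks only at his group’s history. The selection ratio of 0.5 compares the lowest group rate with the highest. US employment guidelines commonly apply a “four-fifths rule” and treat a ratio below 0.8 as a sign of possible adverse impact. A check like this only detects a disparity; it does not explain the cause.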

3. Facial Recognition and Surveillance

AI-powered surveillance tools have raised alarms about privacy violations, especially when they are deployed without public consent or when their accuracy is lower for some ethnic groups than for others. Should we use technology that might violate civil rights, even if it’s effective in preventing crime?

Can We Teach AI Morality?

Here are a few approaches being explored:

1. Rule-Based Ethics

This involves creating explicit rules for machines to follow, like Asimov’s famous “Three Laws of Robotics.” Explicit rules make behavior easier to predict, but rule-based systems often struggle with gray areas and with situations their designers never anticipated.
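To make the gray-area problem concrete, here is a minimal Python sketch of an ordered, rule-based decision procedure. Everything in it is hypothetical: the `Rule` type, the two rules, the situation keys (`action_harms_human`, `ordered_by_human`, `inaction_harms_human`), and the `decide` function. The rules are loosely modeled on the idea of prioritized laws and do not reproduce Asimov’s wording or any real system.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Rule:
    name: str
    applies: Callable[[dict], bool]  # does this rule cover the situation?
    verdict: str                     # what the rule says to do


# Hypothetical rules in priority order: avoiding harm outranks obedience.
RULES = [
    Rule("avoid_harm", lambda s: s.get("action_harms_human", False), "refuse"),
    Rule("obey_order", lambda s: s.get("ordered_by_human", False), "comply"),
]


def decide(situation: dict) -> Optional[str]:
    """Return the verdict of the first matching rule, or None if none applies."""
    for rule in RULES:
        if rule.applies(situation):
            return rule.verdict
    return None


print(decide({"ordered_by_human": True}))                               # comply
print(decide({"ordered_by_human": True, "action_harms_human": True}))   # refuse
# A no-win case: refusing also causes harm, but no rule ever reads that key.
print(decide({"action_harms_human": True, "inaction_harms_human": True}))  # refuse
# A situation the designers never anticipated falls through every rule.
print(decide({"unfamiliar_situation": True}))                           # None
```

The rules handle the cases they were written for. In the no-win case, though, the system still confidently answers “refuse,” because harm from inaction was never encoded. In the unanticipated case, it has no answer at all.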

2. Machine Learning with Ethical Data

Some researchers are attempting to train AI on large datasets of ethically judged decisions — teaching machines “what people would do” in a given situation. But again, if the data reflects bias or inconsistency, so will the AI.
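A small sketch can show how inconsistency in judged data carries over into the model. The example below assumes the simplest possible learner: for each scenario, it predicts the majority human label and also records what share of annotators agreed. The scenarios, labels, and the `learn` function are hypothetical and do not reflect any real dataset or research system.

```python
from collections import Counter

# Hypothetical crowd judgments for two scenarios; invented for illustration.
JUDGMENTS = {
    "break_promise_to_save_life": ["ok", "ok", "ok", "ok", "not_ok"],
    "lie_to_protect_feelings": ["ok", "not_ok", "ok", "not_ok", "not_ok"],
}


def learn(judgments):
    """Map each scenario to (majority label, share of annotators who agreed)."""
    model = {}
    for scenario, labels in judgments.items():
        label, count = Counter(labels).most_common(1)[0]
        model[scenario] = (label, count / len(labels))
    return model


model = learn(JUDGMENTS)
print(model["break_promise_to_save_life"])  # ('ok', 0.8)
print(model["lie_to_protect_feelings"])     # ('not_ok', 0.6)
```

If you look only at the label, the second verdict seems as settled as the first, even though the annotators nearly split. Keeping the agreement share alongside the prediction is one simple way to surface that inconsistency instead of hiding it.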

3. Value Alignment

This approach focuses on aligning AI systems with human values — through feedback loops, human oversight, and ongoing learning. But the challenge remains: Whose values should AI follow? And how do we update them as society changes?

Why This Matters Now

As AI systems increasingly make decisions autonomously in law enforcement, healthcare, transportation, and finance, the consequences of unethical behavior are no longer theoretical.

- An AI denying a loan based on flawed assumptions can devastate a family.

- A facial recognition error can lead to wrongful arrests.

- A biased hiring algorithm can cost someone their career.

We can’t treat ethics as an afterthought — it must be baked into the design of intelligent systems from the start.

Who’s Responsible?

Responsibility for ethical AI doesn’t fall solely on machines, or even on developers alone. It’s a shared responsibility:

- Developers must understand the ethical implications of their work.

- Companies must prioritize fairness and transparency over speed or profit.

- Governments must regulate and provide clear guidelines.

- Society must stay informed and demand accountability.

Final Thoughts

Can machines have morals? Not in the way humans do. But they can be designed to make ethically informed decisions — if we’re willing to put in the effort.

Programming ethics into AI is one of the most complex, urgent, and human challenges of our time. It’s not just about making smarter machines — it’s about ensuring that, as they grow more powerful, they serve us wisely and justly.